Architecture

Case Studies

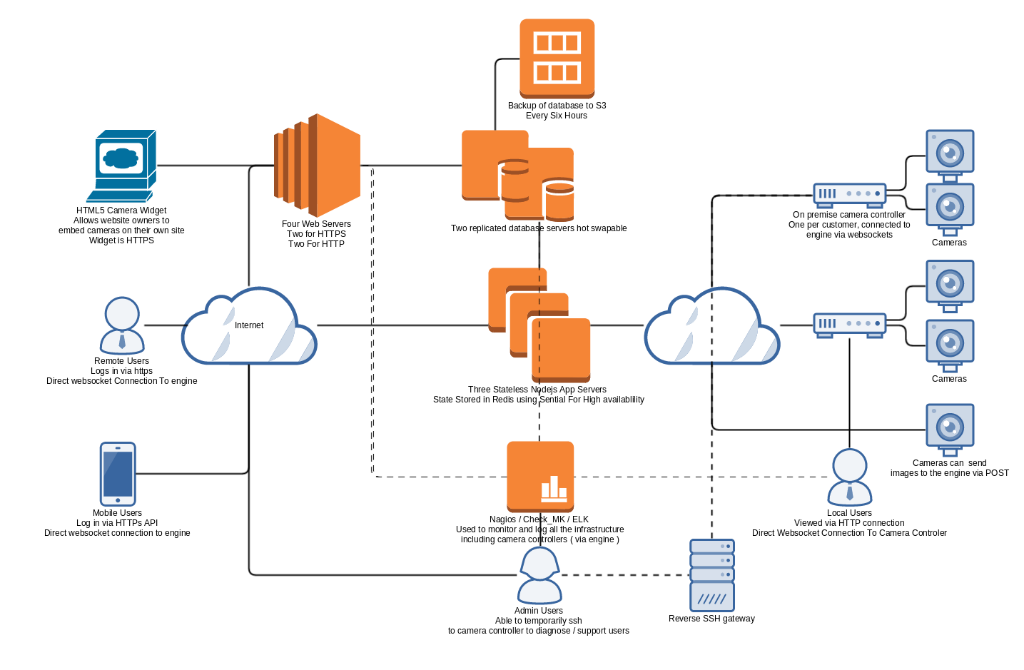

Agricamera

Agricamera is a start-up competing in the CCTV industry. Its unique value proposition is that, unlike other CCTV providers, its solution requires no third-party intervention and "just works".

In order to achieve this goal the architecture needed to tackle the following challenges:

- Communicate with cameras behind home routers without customers needing to log on to the router

- Allow support staff to diagnose networking problems

- Platform must be available at all times

- Customers must have the best possible view of the camera wherever they currently are, whether on the local network or viewing remotely

- Cameras must be accessible via the widest range of devices

- Quickly scalable as customer base grows

The bulk of the application is cloud based, with individual camera controllers running on Agricamera's customers' networks. Every server is replicated more than once and high availability is considered at all points. The application was designed to be internet based from the outset, differentiating Agricamera from most of its competitors.

A single-board computer is sold to each customer and sits on their network. The board is programmed to automatically discover cameras by scanning for appropriate MAC addresses and then registering the cameras with the application servers via a WebSocket connection. This removes the need for customers to alter or configure their routers to allow remote access to their cameras.
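The discovery step can be sketched as follows. This is a minimal illustration in Python rather than the controller's actual code: the vendor prefixes, the example neighbour table and the function names are all assumptions made for the example.

```python
from typing import Iterable

# Hypothetical vendor prefixes (the first three octets of a MAC address).
# Real values would come from the supported camera manufacturers.
CAMERA_OUI_PREFIXES = {"00:11:22", "AA:BB:CC"}


def normalise_mac(mac: str) -> str:
    """Return a MAC address in upper-case, colon-separated form."""
    return mac.strip().upper().replace("-", ":")


def find_cameras(neighbours: Iterable[tuple[str, str]]) -> list[dict[str, str]]:
    """Keep only (ip, mac) pairs whose MAC prefix matches a known camera vendor."""
    cameras = []
    for ip, mac in neighbours:
        mac = normalise_mac(mac)
        if mac[:8] in CAMERA_OUI_PREFIXES:
            cameras.append({"ip": ip, "mac": mac})
    return cameras


# Example neighbour table, as might be read from the controller's ARP cache.
neighbours = [
    ("192.168.1.10", "00-11-22-33-44-55"),  # matches a camera prefix
    ("192.168.1.20", "de:ad:be:ef:00:01"),  # some other device
]
found = find_cameras(neighbours)
```

Each match would then be reported to the application servers over the controller's outbound WebSocket connection, which is why no inbound router configuration is needed.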

There are two methods by which the user can access their cameras: a web-based browser application and a mobile application. The web-based application is written in PHP, using RequireJS and Knockout.js. The mobile application is built using the Ionic Framework and AngularJS, which allow rapid development across the largest number of devices whilst keeping one shared code base.

Both the desktop and mobile applications are smart enough to work out whether the cameras are available on the local network and will pick the appropriate connection method depending on where the user is. Agricamera's admin staff are able to configure different resolution streams on a per-connection and per-device basis. This ensures that customers are always getting the best possible viewing experience from the platform.
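The local-versus-remote decision might look something like the sketch below. It is written in Python for illustration only (the real clients are JavaScript applications), and the camera fields, the probe function and the default port are assumptions, not details taken from the platform.

```python
import socket
from typing import Callable


def tcp_probe(host: str, port: int = 554, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    Port 554 (RTSP) is an assumed default for this example.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def choose_stream(camera: dict, probe: Callable[[str], bool] = tcp_probe) -> str:
    """Prefer the direct local stream when the camera answers on the LAN."""
    if probe(camera["local_host"]):
        return camera["local_url"]
    return camera["remote_url"]


# Hypothetical camera record; all values are placeholders.
camera = {
    "local_host": "192.168.1.10",
    "local_url": "rtsp://192.168.1.10/high",
    "remote_url": "https://stream.example.com/cam/1/low",
}

# The probe is injected so the choice can be exercised without a network.
at_home = choose_stream(camera, probe=lambda host: True)
away = choose_stream(camera, probe=lambda host: False)
```

Passing the probe in as a parameter keeps the selection logic separate from the network check, so each can be changed or tested on its own.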

Each Node.js application server runs on a single core, with Nginx used to balance the load between them. Additionally, Nginx balances traffic across the web servers and across the Nginx daemons running on the engines. This allows both vertical and horizontal scaling of engine servers. This is used to full effect when Agricamera's platform is used to stream popular events, such as sheep shearing world records.
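As a rough illustration of one process per core behind a balancer, the Python sketch below builds one upstream address per core and hands them out in turn. Round robin is Nginx's default balancing method, but whether Agricamera uses it is an assumption, as are the host, base port and core count.

```python
from itertools import cycle


def build_upstreams(host: str, base_port: int, cores: int) -> list[str]:
    """One application process per core, each listening on its own port."""
    return [f"{host}:{base_port + i}" for i in range(cores)]


# Hypothetical engine server with four cores.
upstreams = build_upstreams("127.0.0.1", 3000, 4)

# Round robin: each new request goes to the next upstream in the list.
next_upstream = cycle(upstreams)
first_five = [next(next_upstream) for _ in range(5)]
```

Vertical scaling then means raising `cores` on one machine, and horizontal scaling means adding the upstreams of another machine to the same list.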

The entire software platform is automatically monitored using Check_MK and Nagios. Thorough logging via Elasticsearch, Logstash and Kibana allows quick resolution of support queries and technical issues as they arise. Every server in the system is reproducible via Ansible.

At the time of writing, the application is managing over 100 permanently connected camera controllers, with over 300 cameras streaming in real time.

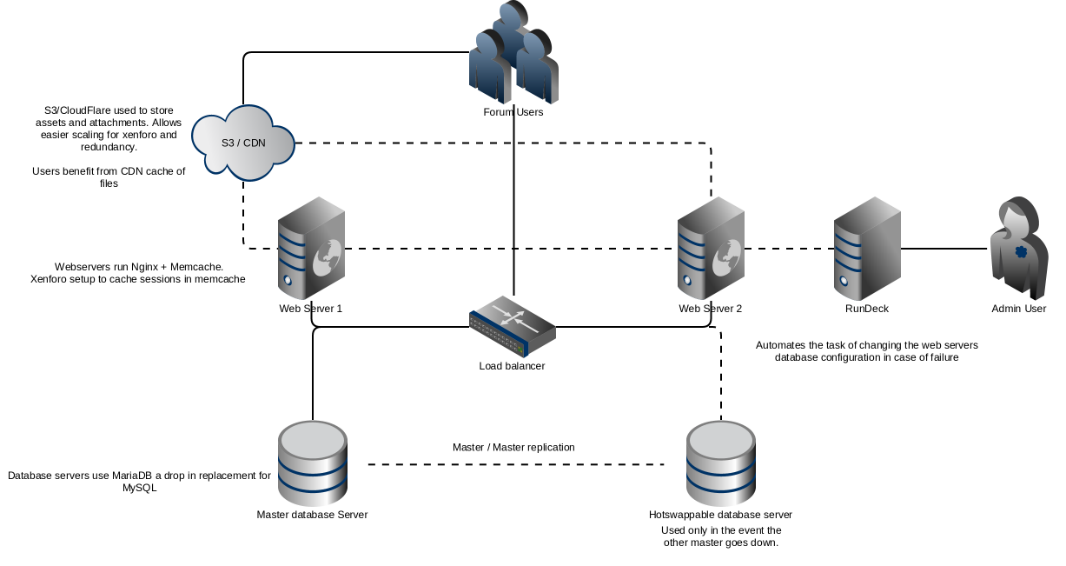

TheFarmingForum

The Farming Forum was gaining popularity and quickly reaching a level where the risks of running the forum on a single server were no longer acceptable for the business.

XenForo is not designed to run on multiple servers out of the box, so understanding how the existing system worked was crucial to designing a multi-server setup. Assets were the big problem to solve: in order to move from one server to several, uploads and attachments created by forum members would need to be moved into the cloud.

Plugins were found for XenForo that automated the uploading of assets to the cloud. A custom bash script using s3cmd was written to transfer the existing assets into S3 and update the XenForo database as appropriate.

High availability would be achieved by utilising Linode's NodeBalancers. A single NodeBalancer would sit in front of the web servers and distribute traffic between them in round-robin fashion. Memcached was chosen to store the session information, and the XenForo software was adapted to write all session information to each Memcached instance.
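Writing every session to every cache instance means any web server can find a session even if one Memcached instance is down. The Python sketch below illustrates the idea only: it is not the XenForo modification itself, and `InMemoryCache` is a hypothetical stand-in for a real Memcached client so that the example runs without a server.

```python
class InMemoryCache:
    """Stand-in for a Memcached client, used so the sketch runs without a server."""

    def __init__(self):
        self._data = {}
        self.up = True  # flip to False to simulate an outage

    def set(self, key, value):
        if not self.up:
            raise ConnectionError("cache unavailable")
        self._data[key] = value

    def get(self, key):
        if not self.up:
            raise ConnectionError("cache unavailable")
        return self._data.get(key)


class ReplicatedSessionStore:
    """Write each session to every cache; read from the first one that has it."""

    def __init__(self, caches):
        self.caches = list(caches)

    def save(self, session_id, data):
        written = 0
        for cache in self.caches:
            try:
                cache.set(session_id, data)
                written += 1
            except ConnectionError:
                continue  # tolerate an unavailable instance
        if written == 0:
            raise RuntimeError("no cache instance accepted the session")
        return written

    def load(self, session_id):
        for cache in self.caches:
            try:
                value = cache.get(session_id)
            except ConnectionError:
                continue
            if value is not None:
                return value
        return None


cache_a, cache_b = InMemoryCache(), InMemoryCache()
store = ReplicatedSessionStore([cache_a, cache_b])
copies = store.save("session-1", {"user_id": 42})

cache_a.up = False  # simulate losing one instance
recovered = store.load("session-1")
```

The trade-off is extra write traffic per request in exchange for not needing sticky sessions on the load balancer.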

The database would be run in master/master mode, with the XenForo software connected to only one database at any point. Monitoring had shown that the biggest bottleneck in the application was CPU on the web servers, so scaling the database was not a priority. A Rundeck task would be created to allow the active database server to be swapped over to the standby master in case of downtime.

The-Hpo.co.uk

The HPO came to me looking for help updating a critical legacy application to something more robust, faster and more reliable.

By examining and understanding the existing system and how its parts interacted, we were able to build a new solution which provided all the functionality of the previous system and satisfied all of the criteria above.

The new system would comprise five layers:

- An API to interact with the relational database

- A new thin-client JavaScript front end

- A data warehouse which would store data in third normal form

- An Extract, Transform, Load (ETL) application to perform the data integration

- A reporting engine to sit in front of the data warehouse to render the data in a meaningful way

The application would reside entirely in the cloud. A single instance would run all the software together to keep costs low and the architecture simple. Thought was given as to how this could be split in the future; however, this design best fit the customer's needs at the present time. PHP and JavaScript frameworks were chosen based on the client's existing experience with these technologies.

The ETL engine would be built using the Apache Camel library. Camel supports a number of enterprise integration patterns which were well suited to this project. Camel also allows custom processors to be written in Java, and this was used to handle the more complex data transformations needed to implement HPO's reporting algorithms.
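One of the enterprise integration patterns Camel supports is pipes and filters, in which each record passes through a chain of small processors. The sketch below shows that idea in Python purely for illustration; the real engine uses Camel routes and Java processors, and the record fields and processor names here are hypothetical.

```python
from functools import reduce
from typing import Callable, Iterable

Record = dict
Processor = Callable[[Record], Record]


def strip_whitespace(record: Record) -> Record:
    """Trim stray whitespace from every string field."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def parse_amount(record: Record) -> Record:
    """Convert the textual 'amount' field into a number."""
    return {**record, "amount": float(record["amount"])}


def run_pipeline(records: Iterable[Record], processors: list[Processor]) -> list[Record]:
    """Pass each record through every processor in order (pipes and filters)."""
    return [
        reduce(lambda rec, proc: proc(rec), processors, record)
        for record in records
    ]


# Extract: rows as they might arrive from the legacy source (made-up data).
source_rows = [{"customer": " Example Farm ", "amount": "12.50"}]

# Transform: apply each processor in turn. Load would write the result to the warehouse.
loaded = run_pipeline(source_rows, [strip_whitespace, parse_amount])
```

Keeping each transformation as its own processor is what makes the more complex reporting rules manageable: a new rule becomes one more step in the chain.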

SendGrid would be used to handle email. This gave the customer far more visibility than before over the emails sent from the reporting engine, and would reduce what had been a large contributor to the customer's support overheads. It also saved development time on the final application.